[SPARK-40677][CONNECT][FOLLOWUP] Refactor shade relocation/rename rules #38162

LuciferYang wants to merge 11 commits into apache:master

Test first

sbt assembly includes the following:

Thanks for doing this!

rebase

    val cp = (assembly / fullClasspath).value
    cp filter { v =>
      val name = v.data.getName
      name.startsWith("pmml-model-") || name.startsWith("scala-collection-compat_") ||

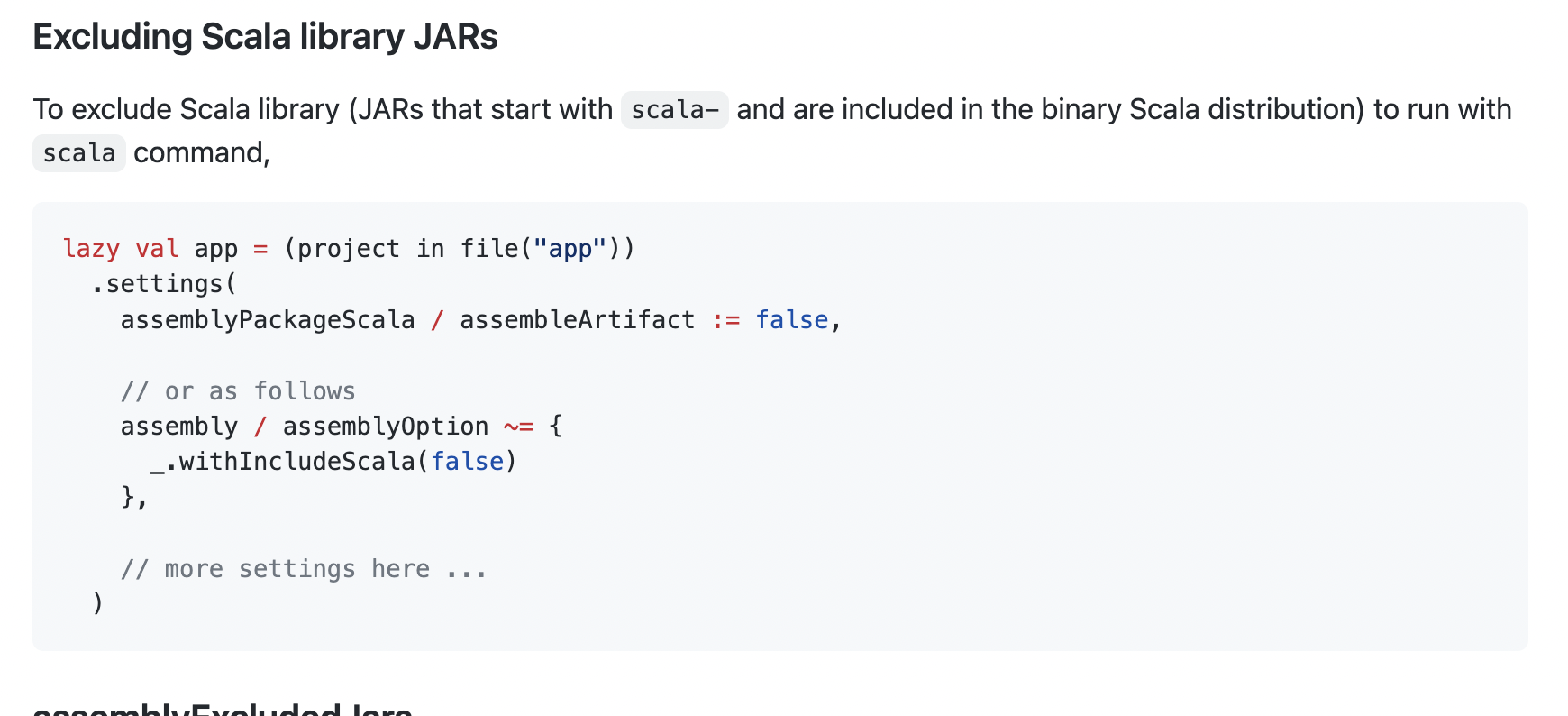

Is scala-collection picked up from Scala library itself? I remember there's an option to exclude this (e.g., includeScala = False)

It should be `(assembly / assemblyPackageScala / assembleArtifact) := false`, but I found that scala-collection was not excluded. Let me try:

    assembly / assemblyOption ~= {
      _.withIncludeScala(false)
    },

@HyukjinKwon The results are the same. According to the documentation this should be the right option, but it only excludes scala-library, not `scala-collection-compat_`.
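One way to handle the behavior described in this thread, sketched here under the assumption of sbt-assembly 1.x with slash syntax, is to keep `withIncludeScala(false)` for scala-library and drop the remaining jar by file name through `assemblyExcludedJars`. The jar-name prefix is the one from the diff under review; the rest is illustrative and not necessarily the exact code in the PR.

```scala
// build.sbt fragment (sketch): assumes the sbt-assembly plugin is enabled for this project.

// Leaves the Scala library itself out of the assembly jar.
assembly / assemblyOption ~= { _.withIncludeScala(false) }

// scala-collection-compat is an ordinary dependency rather than part of the
// Scala library, so it is excluded explicitly by jar file name.
assembly / assemblyExcludedJars := {
  val cp = (assembly / fullClasspath).value
  cp.filter { entry =>
    entry.data.getName.startsWith("scala-collection-compat_")
  }
}
```

Filtering on the file name keeps the rule independent of the Scala binary version suffix that follows the underscore.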

LGTM otherwise. Mind filling in the PR description?

    ShadeRule.rename("com.google.common.**" -> "org.sparkproject.connect.guava.@1").inAll,
    ShadeRule.rename("com.google.thirdparty.**" -> "org.sparkproject.connect.guava.@1").inAll,
    ShadeRule.rename("com.google.protobuf.**" -> "org.sparkproject.connect.protobuf.@1").inAll,
    ShadeRule.rename("android.annotation.**" -> "org.sparkproject.connect.android_annotation.@1").inAll,

just a nit: org.sparkproject -> org.apache.spark.connect.

No, I think we should follow the existing rules: `spark.shade.packageName` was defined as `org.sparkproject` on May 8, 2019. If we need to change this rule, it is better to change it uniformly in an independent PR.
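For readers less familiar with sbt-assembly shade rules, here is a small sketch of how the prefix discussed in this thread enters a rename rule. In the jarjar-style pattern syntax that sbt-assembly uses, `**` matches the remaining package path and `@1` substitutes whatever that first wildcard matched. The `shadePrefix` value is a hypothetical name introduced only for illustration; the PR itself writes the prefix inline.

```scala
// build.sbt fragment (sketch): `shadePrefix` is a hypothetical helper value, not from the PR.
val shadePrefix = "org.sparkproject.connect"

assembly / assemblyShadeRules := Seq(
  // e.g. com.google.common.collect.ImmutableList
  //   -> org.sparkproject.connect.guava.collect.ImmutableList
  ShadeRule.rename("com.google.common.**" -> s"$shadePrefix.guava.@1").inAll,
  // e.g. com.google.protobuf.Message
  //   -> org.sparkproject.connect.protobuf.Message
  ShadeRule.rename("com.google.protobuf.**" -> s"$shadePrefix.protobuf.@1").inAll
)
```

Keeping the prefix in one value would make a later, project-wide change of the shaded package name a one-line edit, which fits the suggestion to change it uniformly in a separate PR.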

    cp filter { v =>
      val name = v.data.getName
      name.startsWith("pmml-model-") || name.startsWith("scala-collection-compat_") ||
        name.startsWith("jsr305-") || name.startsWith("netty-") || name == "unused-1.0.0.jar"

@HyukjinKwon should we share Netty with Spark?

Maybe we need to merge #38185 first; otherwise, netty cannot be filtered out here.

rebased

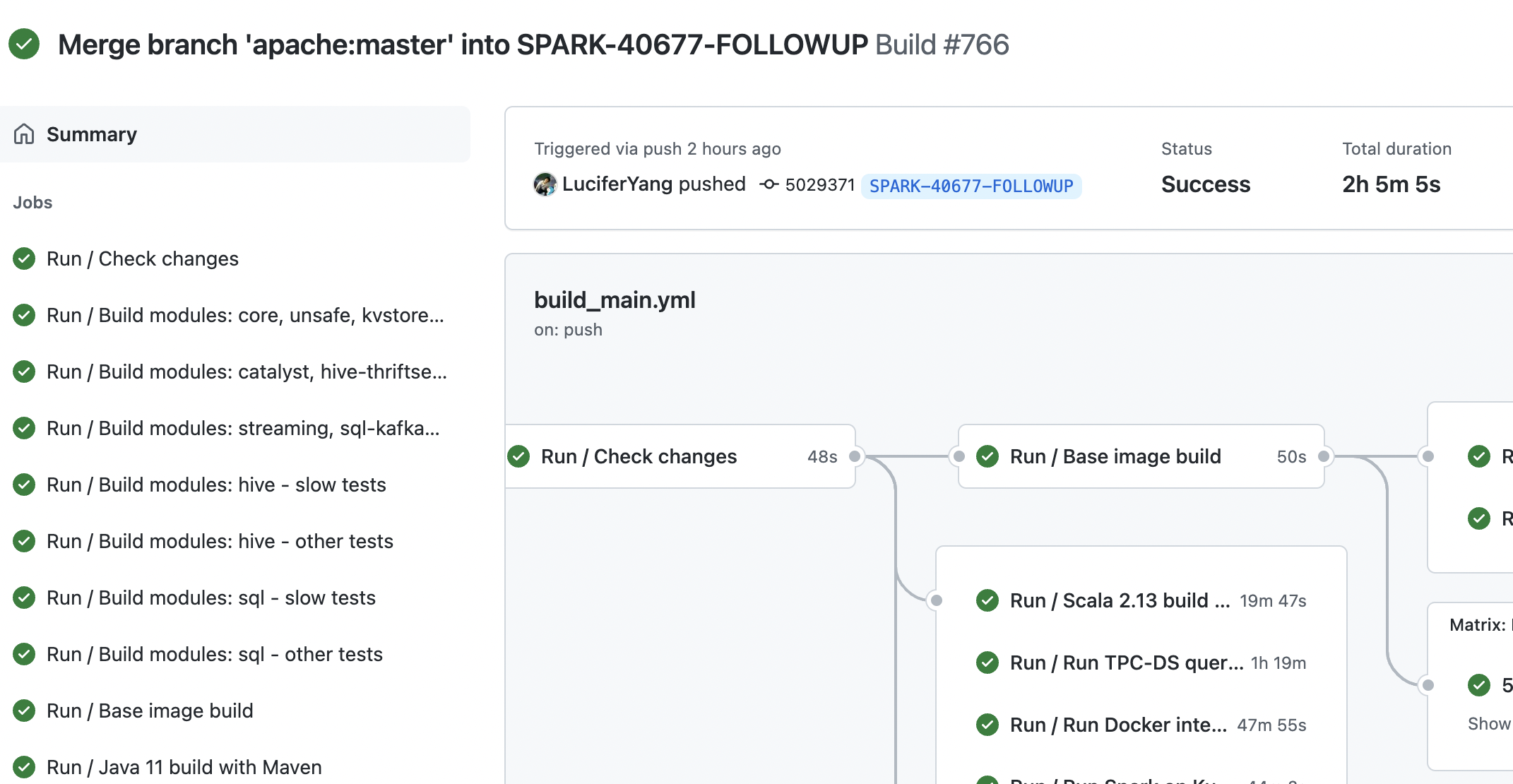

https://github.com/LuciferYang/spark/actions/runs/3219169722 GA passed, but the status was not updated.

Merged to master.

Thanks @HyukjinKwon @amaliujia @grundprinzip

…ules

What changes were proposed in this pull request?

The main change of this PR is to refactor the shade relocation/rename rules with reference to the result of `mvn dependency:tree -pl connector/connect`, to ensure that Maven and sbt produce the assembly jar according to the same rules.

The main parts of the `mvn dependency:tree -pl connector/connect` result are as follows:

```
[INFO] +- com.google.guava:guava:jar:31.0.1-jre:compile
[INFO] |  +- com.google.guava:listenablefuture:jar:9999.0-empty-to-avoid-conflict-with-guava:compile
[INFO] |  +- org.checkerframework:checker-qual:jar:3.12.0:compile
[INFO] |  +- com.google.errorprone:error_prone_annotations:jar:2.7.1:compile
[INFO] |  \- com.google.j2objc:j2objc-annotations:jar:1.3:compile
[INFO] +- com.google.guava:failureaccess:jar:1.0.1:compile
[INFO] +- com.google.protobuf:protobuf-java:jar:3.21.1:compile
[INFO] +- io.grpc:grpc-netty:jar:1.47.0:compile
[INFO] |  +- io.grpc:grpc-core:jar:1.47.0:compile
[INFO] |  |  +- com.google.code.gson:gson:jar:2.9.0:runtime
[INFO] |  |  +- com.google.android:annotations:jar:4.1.1.4:runtime
[INFO] |  |  \- org.codehaus.mojo:animal-sniffer-annotations:jar:1.19:runtime
[INFO] |  +- io.netty:netty-codec-http2:jar:4.1.72.Final:compile
[INFO] |  |  \- io.netty:netty-codec-http:jar:4.1.72.Final:compile
[INFO] |  +- io.netty:netty-handler-proxy:jar:4.1.72.Final:runtime
[INFO] |  |  \- io.netty:netty-codec-socks:jar:4.1.72.Final:runtime
[INFO] |  +- io.perfmark:perfmark-api:jar:0.25.0:runtime
[INFO] |  \- io.netty:netty-transport-native-unix-common:jar:4.1.72.Final:runtime
[INFO] +- io.grpc:grpc-protobuf:jar:1.47.0:compile
[INFO] |  +- io.grpc:grpc-api:jar:1.47.0:compile
[INFO] |  |  \- io.grpc:grpc-context:jar:1.47.0:compile
[INFO] |  +- com.google.api.grpc:proto-google-common-protos:jar:2.0.1:compile
[INFO] |  \- io.grpc:grpc-protobuf-lite:jar:1.47.0:compile
[INFO] +- io.grpc:grpc-services:jar:1.47.0:compile
[INFO] |  \- com.google.protobuf:protobuf-java-util:jar:3.19.2:runtime
[INFO] +- io.grpc:grpc-stub:jar:1.47.0:compile
[INFO] +- org.spark-project.spark:unused:jar:1.0.0:compile
```

The new shade rule excludes the following jar packages:

- scala related jars
- netty related jars
- jars that only sbt included before: `pmml-model-*.jar`, findbugs `jsr305-*.jar`, spark `unused-1.0.0.jar`

So after this PR, Maven shade will include the following jars:

```
[INFO] --- maven-shade-plugin:3.2.4:shade (default) @ spark-connect_2.12 ---
[INFO] Including com.google.guava:guava:jar:31.0.1-jre in the shaded jar.
[INFO] Including com.google.guava:listenablefuture:jar:9999.0-empty-to-avoid-conflict-with-guava in the shaded jar.
[INFO] Including org.checkerframework:checker-qual:jar:3.12.0 in the shaded jar.
[INFO] Including com.google.errorprone:error_prone_annotations:jar:2.7.1 in the shaded jar.
[INFO] Including com.google.j2objc:j2objc-annotations:jar:1.3 in the shaded jar.
[INFO] Including com.google.guava:failureaccess:jar:1.0.1 in the shaded jar.
[INFO] Including com.google.protobuf:protobuf-java:jar:3.21.1 in the shaded jar.
[INFO] Including io.grpc:grpc-netty:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-core:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.code.gson:gson:jar:2.9.0 in the shaded jar.
[INFO] Including com.google.android:annotations:jar:4.1.1.4 in the shaded jar.
[INFO] Including org.codehaus.mojo:animal-sniffer-annotations:jar:1.19 in the shaded jar.
[INFO] Including io.perfmark:perfmark-api:jar:0.25.0 in the shaded jar.
[INFO] Including io.grpc:grpc-protobuf:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-api:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-context:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.api.grpc:proto-google-common-protos:jar:2.0.1 in the shaded jar.
[INFO] Including io.grpc:grpc-protobuf-lite:jar:1.47.0 in the shaded jar.
[INFO] Including io.grpc:grpc-services:jar:1.47.0 in the shaded jar.
[INFO] Including com.google.protobuf:protobuf-java-util:jar:3.19.2 in the shaded jar.
[INFO] Including io.grpc:grpc-stub:jar:1.47.0 in the shaded jar.
```

sbt assembly will include the following jars:

```
[debug] Including from cache: j2objc-annotations-1.3.jar
[debug] Including from cache: guava-31.0.1-jre.jar
[debug] Including from cache: protobuf-java-3.21.1.jar
[debug] Including from cache: grpc-services-1.47.0.jar
[debug] Including from cache: failureaccess-1.0.1.jar
[debug] Including from cache: grpc-stub-1.47.0.jar
[debug] Including from cache: perfmark-api-0.25.0.jar
[debug] Including from cache: annotations-4.1.1.4.jar
[debug] Including from cache: listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar
[debug] Including from cache: animal-sniffer-annotations-1.19.jar
[debug] Including from cache: checker-qual-3.12.0.jar
[debug] Including from cache: grpc-netty-1.47.0.jar
[debug] Including from cache: grpc-api-1.47.0.jar
[debug] Including from cache: grpc-protobuf-lite-1.47.0.jar
[debug] Including from cache: grpc-protobuf-1.47.0.jar
[debug] Including from cache: grpc-context-1.47.0.jar
[debug] Including from cache: grpc-core-1.47.0.jar
[debug] Including from cache: protobuf-java-util-3.19.2.jar
[debug] Including from cache: error_prone_annotations-2.10.0.jar
[debug] Including from cache: gson-2.9.0.jar
[debug] Including from cache: proto-google-common-protos-2.0.1.jar
```

All the dependencies mentioned above are relocated to the `org.sparkproject.connect` package according to the new rules to avoid conflicts with other third-party dependencies.

Why are the changes needed?

Refactor the shade relocation/rename rules to ensure that Maven and sbt produce the assembly jar according to the same rules.

Does this PR introduce any user-facing change?

No

How was this patch tested?

Pass GitHub Actions

Closes apache#38162 from LuciferYang/SPARK-40677-FOLLOWUP.

Lead-authored-by: yangjie01 <yangjie01@baidu.com>
Co-authored-by: YangJie <yangjie01@baidu.com>
Signed-off-by: Hyukjin Kwon <gurwls223@apache.org>

What changes were proposed in this pull request?

The main change of this PR is to refactor the shade relocation/rename rules with reference to the result of `mvn dependency:tree -pl connector/connect`, to ensure that Maven and sbt produce the assembly jar according to the same rules.

The new shade rule excludes the following jar packages:

- scala related jars
- netty related jars
- jars that only sbt included before: `pmml-model-*.jar`, findbugs `jsr305-*.jar`, spark `unused-1.0.0.jar`

The main parts of the `mvn dependency:tree -pl connector/connect` result, and the jars that Maven shade and sbt assembly include after this PR, are listed in full in the commit message above.

All the dependencies mentioned above are relocated to the `org.sparkproject.connect` package according to the new rules to avoid conflicts with other third-party dependencies.

Why are the changes needed?

Refactor the shade relocation/rename rules to ensure that Maven and sbt produce the assembly jar according to the same rules.

Does this PR introduce any user-facing change?

No

How was this patch tested?

Pass GitHub Actions